My Cloud Resume Challenge Experience

Introduction to the Challenge

The objective of the Cloud Resume Challenge is to help participants build and demonstrate fundamental cloud computing skills and acquire the skills required to succeed as an AWS cloud engineer. The challenge consists of two parts: a frontend and a backend. The frontend is a website hosting a resume, which is continuously deployed to an AWS S3 bucket and the CloudFront CDN through a CI/CD pipeline running on GitHub Actions. The backend includes a second CI/CD pipeline, also running on GitHub Actions, that automates the deployment of AWS SAM/CloudFormation artifacts. A REST API triggering a Python-based Lambda function is required to update a DynamoDB table created in AWS, and participants must test the Python code using the pytest framework. Click the link for details of this challenge. The sections below present my experience with the Cloud Resume Challenge.

How and when I discovered the Cloud Resume Challenge

I was introduced to the Cloud Resume Challenge by a friend, right after passing the AWS Certified Cloud Practitioner and AWS Certified Solutions Architect-Associate exams. I accepted the challenge and quickly read through the steps of the project. On the surface, all the steps appeared straightforward, and I was confident that I could complete the entire challenge in one weekend. Long story short, it took me longer than anticipated.

Frontend

I started the challenge by working on the frontend. The frontend was relatively easy because of my prior knowledge of HTML and CSS, and I was already comfortable with GitHub. A good course to help with the frontend is HTML, CSS and JavaScript. I took this course about a year ago, so I did not have many issues with the HTML and CSS. For my resume's format and structure, I edited a template to fit my needs.

Backend

The backend proved challenging, especially AWS SAM and Infrastructure as Code (IaC) as a whole. I was very familiar with the AWS resources in the challenge and very comfortable creating them in the AWS console. However, provisioning the same resources with Infrastructure as Code was much harder. I spent the majority of my time working on the backend. I enrolled in AWS Lambda and Serverless Architecture Bootcamp, which introduced me to serverless architecture and Infrastructure as Code and gave me some tools to get started. Additionally, I researched unfamiliar serverless concepts extensively, relying on the AWS documentation, Stack Overflow, and friends whenever I got stuck.

Automation

For automation and CI/CD, I used GitHub Actions for both the frontend and the backend. The GitHub Actions workflows run on Linux and execute bash scripts. I created and securely configured the GitHub Actions workflow so that every push of my local code to the master branch of the frontend repository triggers the CI/CD workflow, which automatically updates the S3 bucket. The backend CI/CD workflow consists of these steps: Setting up the job, Checkout, Installing Python Dependencies, Unit Testing, SAM Build, and Deploy. The SAM template is only built and deployed after the Python-based Lambda function passes the unit tests.
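The Unit Testing step is what gates the deployment: if pytest reports a failure, the job stops before SAM Build runs. A minimal sketch of such a test file follows, assuming a hypothetical handler `lambda_handler(event, context, table=None)` that accepts an injectable table, so the tests can pass a `unittest.mock.MagicMock` instead of calling DynamoDB. The table name `visitors`, the key `id`, and the attribute `count` are all hypothetical. In a real repository the handler would live in its own module and the tests would import it; both are combined here to keep the sketch self-contained.

```python
import json
from unittest.mock import MagicMock


def lambda_handler(event, context, table=None):
    """Hypothetical visitor-counter handler; `table` can be injected so tests avoid AWS."""
    if table is None:
        import boto3  # only imported when running against real DynamoDB
        table = boto3.resource("dynamodb").Table("visitors")  # hypothetical table name
    # Atomically add 1 to the counter; "count" is a DynamoDB reserved word, hence #c.
    result = table.update_item(
        Key={"id": "resume"},
        UpdateExpression="ADD #c :inc",
        ExpressionAttributeNames={"#c": "count"},
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    count = int(result["Attributes"]["count"])
    return {"statusCode": 200, "body": json.dumps({"count": count})}


def test_handler_returns_updated_count():
    # The mock returns a canned DynamoDB response; no network call is made.
    table = MagicMock()
    table.update_item.return_value = {"Attributes": {"count": 5}}
    response = lambda_handler({}, None, table=table)
    assert response["statusCode"] == 200
    assert json.loads(response["body"]) == {"count": 5}


def test_handler_increments_by_one():
    table = MagicMock()
    table.update_item.return_value = {"Attributes": {"count": 1}}
    lambda_handler({}, None, table=table)
    # The handler should issue exactly one update that adds 1.
    table.update_item.assert_called_once()
    assert table.update_item.call_args.kwargs["ExpressionAttributeValues"] == {":inc": 1}
```

Saved as, for example, `test_handler.py`, running `pytest` discovers both `test_` functions. A failing test makes pytest exit with a non-zero status, which fails the workflow step, and by default GitHub Actions then skips the SAM Build and Deploy steps.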

DNS, Hosting, CDN, SSL Certificate

I registered a domain in Route 53 and created an SSL certificate in AWS Certificate Manager. You are free to use any service to register your domain; I chose Route 53 to keep everything within the AWS ecosystem. Being in the same ecosystem also makes it simple to point the domain's DNS records at the CloudFront distribution.
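On the DNS side, pointing a Route 53 domain at CloudFront typically comes down to an alias record. A minimal sketch of building that record in Python follows. The helper `cloudfront_alias_change_batch` is hypothetical, and `resume.example.com` and `d111111abcdef8.cloudfront.net` are placeholder names. The returned dictionary has the shape of the `ChangeBatch` argument to boto3's Route 53 client method `change_resource_record_sets(HostedZoneId=..., ChangeBatch=...)`, where `HostedZoneId` is your own hosted zone. Inside the alias target, `Z2FDTNDATAQYW2` is the fixed hosted zone ID AWS uses for CloudFront distributions.

```python
# Fixed hosted zone ID that Route 53 uses for all CloudFront alias targets.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def cloudfront_alias_change_batch(domain_name, distribution_domain, include_ipv6=True):
    """Build a Route 53 ChangeBatch aliasing `domain_name` to a CloudFront distribution.

    Hypothetical helper: it only builds the request body and makes no AWS calls.
    """
    # A record for IPv4; AAAA only makes sense if IPv6 is enabled on the distribution.
    record_types = ["A", "AAAA"] if include_ipv6 else ["A"]
    changes = []
    for record_type in record_types:
        changes.append({
            "Action": "UPSERT",  # create the record, or overwrite it if it exists
            "ResourceRecordSet": {
                "Name": domain_name,
                "Type": record_type,
                "AliasTarget": {
                    "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                    "DNSName": distribution_domain,
                    "EvaluateTargetHealth": False,
                },
            },
        })
    return {"Comment": f"Alias {domain_name} to CloudFront", "Changes": changes}


if __name__ == "__main__":
    batch = cloudfront_alias_change_batch("resume.example.com", "d111111abcdef8.cloudfront.net")
    for change in batch["Changes"]:
        print(change["ResourceRecordSet"]["Type"], change["ResourceRecordSet"]["Name"])
```

Using an alias record rather than a CNAME lets the zone apex (for example `example.com` itself) point at CloudFront, which a CNAME cannot do.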

API Gateway, Lambda and DynamoDB

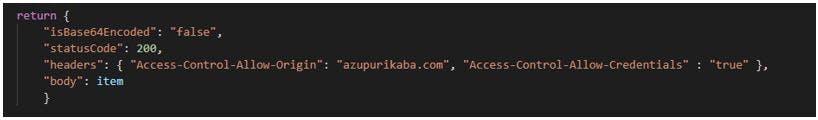

In this section, I created a RESTful API that invokes a Lambda function. When the API is called, API Gateway routes the request to the Python-based Lambda function. The Lambda function, configured with an IAM role that allows it to access DynamoDB, updates the DynamoDB table and returns a response to API Gateway. API Gateway then returns that response, the current number of website visits, to the caller. One of the problems I encountered in this section was getting these services to work together, especially the API. I had read earlier that CORS is a common problem with APIs, and I spent a lot of time researching it. It turned out that I had omitted https in the Access-Control-Allow-Origin value. The snippet below shows a portion of my Python function that puts and updates the DynamoDB table.
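A minimal sketch of a visitor-counter handler of this kind follows, assuming Lambda proxy integration (so the function itself returns `statusCode`, `headers`, and `body`). The origin `https://resume.example.com`, the table `visitors`, the key `id`, and the attribute `count` are hypothetical placeholders. The important detail is the CORS header: the browser compares Access-Control-Allow-Origin with its own origin, which always includes the scheme, so a value like `resume.example.com` without `https://` never matches and the response is blocked.

```python
import json

# Hypothetical values; the origin must include the scheme to match the browser's Origin.
ALLOWED_ORIGIN = "https://resume.example.com"
TABLE_NAME = "visitors"


def lambda_handler(event, context, table=None):
    """Increment the visitor count in DynamoDB and return it with a CORS header."""
    if table is None:
        import boto3  # deferred so the handler can be exercised without AWS
        table = boto3.resource("dynamodb").Table(TABLE_NAME)

    # ADD treats a missing attribute (or item) as 0, then increments atomically.
    # "count" is a DynamoDB reserved word, hence the #c placeholder.
    result = table.update_item(
        Key={"id": "resume"},
        UpdateExpression="ADD #c :inc",
        ExpressionAttributeNames={"#c": "count"},
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    count = int(result["Attributes"]["count"])  # DynamoDB returns a Decimal

    return {
        "statusCode": 200,
        "headers": {
            # Omitting "https://" here makes the browser reject the response.
            "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
            "Content-Type": "application/json",
        },
        "body": json.dumps({"count": count}),
    }


if __name__ == "__main__":
    class _StubTable:
        """Stand-in for a DynamoDB Table, returning a fixed count."""

        def update_item(self, **kwargs):
            return {"Attributes": {"count": 7}}

    print(lambda_handler({}, None, table=_StubTable()))
```

Using an atomic `ADD` update rather than reading the count and writing it back avoids lost updates when two visitors load the page at the same time.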

Conclusion

Honestly, the project was very challenging, but it was exactly what I needed to practice my newly acquired cloud computing knowledge. I had to research extensively to find solutions, which I am very glad I did. I discovered several platforms, portals, and AWS documentation resources that were very useful and will continue to help me in my career. Most importantly, I learnt to integrate AWS resources and services to develop a complete application. I learnt infrastructure as code, application security and networking, serverless architecture, service automation, and CI/CD, as well as how to integrate unit testing into the workflow pipeline. Here is the product of the challenge. I would definitely recommend this challenge to anyone new to AWS who is seeking a career in cloud computing, or to anyone looking for an interesting weekend project. The challenge nicely integrates AWS resources, and I really enjoyed working on it.